Machine Learning at scale, what about runtime performance?

Training ML models is only the first step. When you need to deploy and operate them at scale, you have to ask yourself which options you have to run inference as efficiently as possible.

Python has become increasingly popular for Machine Learning due to its versatility and wide range of libraries. The syntax is intuitive and easy to learn, making it a great choice for data scientists of any skill level.

Python can be used for data analysis, visualization, and machine learning, allowing data scientists to quickly generate insights from their data. Additionally, Python is an open-source language, giving data scientists free access to many powerful and useful libraries, including, of course, scikit-learn.

And let's be honest: Python is a great language, even for software engineers, for the same reasons. But is Python the best choice to run end-to-end Machine Learning at scale, especially when you need to operate in "real time" and/or under heavy load? Let's discuss the two main options we have when we want to deploy and operate ML models, the discipline also known as MLOps.

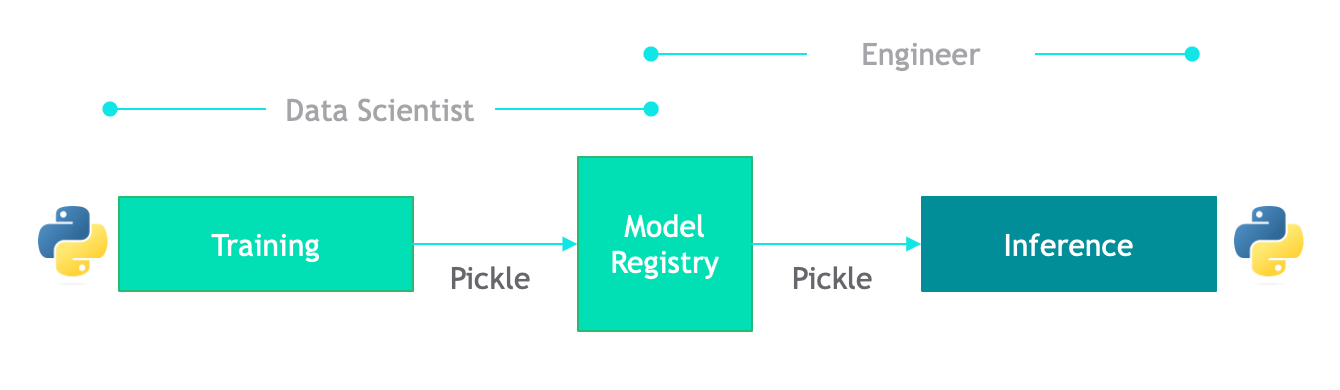

When your model is ready, meaning is fully approved by the Data Scientist and the business stakeholders, it is time to involve ML Engineers, Data Engineers, … to create the end-to-end solution to give it life and make it operational. And the very high-level picture to represent this pipeline can be the following one:

While this type of deployment works fine and is fairly easy to set up, in real life we quickly run into some pain points:

- Using Python for inference, even though Python is well known not to be the most efficient or performant language
  - Acceptable if your model is rarely executed, but what about a model called a thousand times per minute?
- Using pickle as the serialization format, which forces us to stick to Python
  - Even scikit-learn warns about the risks of this type of serialization for models: loading a pickle can execute arbitrary code, and a model pickled with one scikit-learn version is not guaranteed to load with another
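To make the security risk concrete, here is a minimal and harmless sketch. The `Payload` class is hypothetical and stands in for a malicious "model" file: its `__reduce__` method tells pickle to call `eval` on a string of the author's choosing when the file is loaded, so `pickle.loads` returns the result of that call instead of a model.

```python
import pickle


class Payload:
    """Hypothetical object standing in for a malicious 'model' file."""

    def __reduce__(self):
        # pickle rebuilds this object by calling eval("6 * 7") at load time.
        # An attacker would put something like os.system(...) here instead.
        return (eval, ("6 * 7",))


blob = pickle.dumps(Payload())  # bytes that could sit in a "model.pkl" file
loaded = pickle.loads(blob)     # eval runs here, while loading

print(type(loaded).__name__, loaded)  # prints "int 42"
```

Nothing in the file format prevents this, which is why a pickled model should only ever be loaded from a trusted source.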

So, what are our options to improve the situation? The easiest is probably to read a few more lines of the same scikit-learn documentation page and follow the link about ONNX!

ONNX (Open Neural Network Exchange) is an open format for representing ML models that offers several advantages. The first is portability: models can easily move between frameworks (PyTorch, …) because ONNX provides a common definition of what a model is, along with its associated metadata, which makes deployment and monitoring easier.

The second benefit is performance: runtimes such as ONNX Runtime are optimized for various hardware, including CPUs and GPUs, so the same model can run efficiently on many types of hardware.

In a nutshell, a model trained and then saved in the ONNX format can be used on various platforms while potentially running faster in production. Sounds too good to be true!

Let's put this into practice with a really simple scenario

How can we leverage ONNX in our previous pipeline without impacting the work of the data scientists, while improving the overall system? By switching to ONNX, we increase interoperability with existing solutions and improve performance.

With a minimal change at the end of the data scientist's work (saving the model in the ONNX format), we open up a wide range of possibilities for the rest of the solution.

Are you already using Java or .NET as your standard tech stack? Do you want a fancy microservice in Rust? All these options are now open to the engineering team: the integration work is reduced and performance improves, even if you stay with Python!

By using ONNX Runtime instead of the scikit-learn runtime, we can already see a major performance boost. Depending on the type of model, we can easily observe a 4x speedup, meaning more inferences on the same hardware.

And if you switch to another language, like Rust, you get all the benefits of ONNX Runtime plus the strong performance and stability of Rust.

Basically, you will be able to serve many more inference results to your users with much less infrastructure, reducing your cloud bill and, as a direct consequence, your carbon footprint, which is always a good bonus!
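A back-of-the-envelope sketch shows how a faster runtime translates into infrastructure. The figures below are hypothetical, not measurements from this article, and the 70% target utilisation is an assumption to keep some headroom for traffic spikes.

```python
import math


def instances_needed(peak_rps: float, rps_per_instance: float,
                     target_utilisation: float = 0.7) -> int:
    """Instances required so that each one runs at most `target_utilisation` of its capacity."""
    return math.ceil(peak_rps / (rps_per_instance * target_utilisation))


# Hypothetical figures, for illustration only (not measured in this article)
peak_rps = 2_000            # peak inference requests per second
sklearn_rps = 100           # assumed capacity of one instance serving with scikit-learn
onnx_rps = sklearn_rps * 4  # same instance with a runtime 4x faster

print(instances_needed(peak_rps, sklearn_rps))  # 29
print(instances_needed(peak_rps, onnx_rps))     # 8
```

Because of the rounding up, the saving is not exactly 4x, but the fleet shrinks roughly in proportion to the speedup.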

Let's get our hands dirty and write some code to benchmark

Let's start with the training part. We will use the Iris dataset and save the model in two formats: pickle, to load it with scikit-learn, and ONNX, to load it with ONNX Runtime.

```python
import pickle

import pandas as pd
import skl2onnx
from skl2onnx.common.data_types import FloatTensorType
from sklearn.datasets import load_iris
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split


def train_model() -> RandomForestClassifier:
    data = load_iris()
    df = pd.DataFrame(data.data, columns=data.feature_names)
    # Split into train/test (the test split is not used further in this example)
    X_train, X_test, y_train, y_test = train_test_split(
        df, data.target, test_size=0.2, random_state=1
    )
    model = RandomForestClassifier()
    model.fit(X_train, y_train)
    return model


def save_pickle(model: RandomForestClassifier):
    # Pickle: can only be loaded back from Python, with a compatible scikit-learn
    with open("rf_iris.pkl", "wb") as f:
        pickle.dump(model, f)


def save_onnx(model: RandomForestClassifier):
    # One float input named "input" with 4 features per row;
    # None accepts any batch size (the API below sends one row at a time)
    onnx_model = skl2onnx.convert_sklearn(
        model,
        name="random_forest",
        initial_types=[("input", FloatTensorType([None, 4]))],
    )
    with open("rf_iris.onnx", "wb") as f:
        f.write(onnx_model.SerializeToString())


model = train_model()
save_pickle(model)
save_onnx(model)
```

Then we will build a simple API that exposes the model, with a very simple environment-variable switch between the two runtimes:

```python
import logging
import os
import pickle

import numpy
import onnxruntime as rt
import pandas as pd
from fastapi import FastAPI
from pydantic import BaseModel

logging.basicConfig(level=logging.INFO)

# Any non-empty value of the ONNX environment variable selects ONNX Runtime
USE_ONNX = bool(os.environ.get("ONNX"))


class Iris(BaseModel):
    sepal_length: float
    sepal_width: float
    petal_length: float
    petal_width: float


api = FastAPI()

if USE_ONNX:
    model = rt.InferenceSession("rf_iris.onnx", providers=["CPUExecutionProvider"])
    input_name = model.get_inputs()[0].name   # "input", as declared at conversion
    label_name = model.get_outputs()[0].name  # predicted label output
    logging.info("Model loaded with ONNX Runtime")
else:
    with open("rf_iris.pkl", "rb") as f:
        model = pickle.load(f)
    logging.info("Model loaded with scikit-learn runtime")


@api.post("/infer")
async def infer(iris: Iris):
    input_df = pd.DataFrame([iris.__dict__])
    if USE_ONNX:
        # ONNX Runtime: feed a float32 array of shape (1, 4)
        pred_onx = model.run(
            [label_name], {input_name: input_df.values.astype(numpy.float32)}
        )[0]
        return pred_onx.tolist()[0]
    else:
        # scikit-learn: reuse the column names seen during training
        input_df.columns = [
            "sepal length (cm)",
            "sepal width (cm)",
            "petal length (cm)",
            "petal width (cm)",
        ]
        pred_sk = model.predict(input_df)
        return pred_sk.tolist()[0]
```

With this file saved as `infer.py`, we can start the API with scikit-learn by using:

```shell
uvicorn infer:api
```

And with ONNX Runtime by using:

```shell
ONNX=True uvicorn infer:api
```

To simulate some usage on our API, we will use Locust, a very simple and useful Python tool to write stress tests in a few lines of code.

We will simply query our API in a loop, simulating 10 users in parallel.
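Locust is the tool used here. For readers who want to reproduce the same idea without installing anything, a minimal standard-library sketch of the loop could look like the following; it is not Locust, and the helper names `make_http_sender` and `run_load` are hypothetical. It assumes the API runs on uvicorn's default address, `http://127.0.0.1:8000`, and sends the first Iris sample on every request.

```python
import json
import statistics
import time
import urllib.request
from concurrent.futures import ThreadPoolExecutor
from typing import Callable


def make_http_sender(url: str = "http://127.0.0.1:8000/infer") -> Callable[[], None]:
    """Return a function that POSTs one Iris sample to the /infer endpoint."""
    body = json.dumps({
        "sepal_length": 5.1, "sepal_width": 3.5,
        "petal_length": 1.4, "petal_width": 0.2,
    }).encode()

    def send() -> None:
        req = urllib.request.Request(
            url, data=body, headers={"Content-Type": "application/json"}, method="POST"
        )
        with urllib.request.urlopen(req) as resp:
            resp.read()

    return send


def run_load(send: Callable[[], None], users: int = 10, requests_per_user: int = 100) -> dict:
    """Simulate `users` concurrent clients, each calling send() in a loop."""

    def user_loop() -> list:
        latencies = []
        for _ in range(requests_per_user):
            start = time.perf_counter()
            send()
            latencies.append(time.perf_counter() - start)
        return latencies

    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=users) as pool:
        futures = [pool.submit(user_loop) for _ in range(users)]
        latencies = [lat for f in futures for lat in f.result()]
    elapsed = time.perf_counter() - start

    latencies.sort()
    return {
        "requests": len(latencies),
        "rps": len(latencies) / elapsed,
        "p50_ms": statistics.median(latencies) * 1000,
        "p95_ms": latencies[int(0.95 * (len(latencies) - 1))] * 1000,
    }
```

With the API running, `print(run_load(make_http_sender()))` reports the request count, throughput and latency percentiles; running it once per server variant gives a rough comparison. Threads are enough here because each simulated user spends most of its time waiting on the network.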

Let’s start with scikit-learn:

Then let’s restart the API with the ONNX runtime to compare:

So, by changing only a few lines of Python to leverage ONNX, we are already 6 times faster. Not bad, considering how little it costs to switch to ONNX.

And let's not forget the interoperability we gain by using ONNX. Let's try the same very simple server, but in ASP.NET Core and C#. Here is the HTTP handler; I am skipping the few lines of C# needed to start the server:

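```csharp
[HttpPost]
public OkObjectResult Post(PetalInfo data)
{
    // "input" must match the input name declared when converting the model to ONNX.
    // PetalInfo.AsTensor() is a helper (not shown) that packs the four features into a tensor.
    using var result = _session.Run(new List<NamedOnnxValue>
    {
        NamedOnnxValue.CreateFromTensor("input", data.AsTensor())
    });

    // The first output holds the predicted label as an int64 tensor.
    var score = result.First().AsTensor<long>();
    var prediction = score.First();

    return Ok(prediction);
}
```

Let's compare the performance with the two previous APIs: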

Being able to use languages other than Python at runtime opens up a lot of options to integrate with existing systems and solutions and, yes, will probably let you go even faster: Python is powerful and flexible, but it is probably not the best choice in terms of raw performance.

Here we are talking about being 8 times faster than the scikit-learn runtime!

So, what does all this mean?

When operating Machine Learning models at scale, you need to be flexible in terms of tooling, taking into account multiple parameters including the ability to integrate with existing solutions and to provide the best performance possible to your users. The combination of accurate models and speed of deployment/execution is the key to a proper and complete MLOps solution.

By using common, standard technologies like .NET or Java, you will ease the integration of your models while leveraging your existing technology landscape as much as possible, creating real synergy between your data science teams and operational teams.

Does that mean moving to ONNX and/or another language is always needed? Of course not, but it is always important to know the options in front of us and to select the best mix of technologies based on the requirements and each team member's skillset.